Lung-and-colon-cancer-Classification2 /

dens-paper-code.ipynb

import os

import time

import shutil

import pathlib

import itertools

from PIL import Image

# Import data handling tools

import cv2

import numpy as np

import pandas as pd

import seaborn as sns

sns.set_style('darkgrid')

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report, precision_score, recall_score, f1_score

# Import deep learning libraries

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.optimizers import Adam, Adamax

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense, Activation, Dropout, BatchNormalization, GlobalAveragePooling2D

from tensorflow.keras import regularizers

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping, ReduceLROnPlateau

from tensorflow.keras.applications import DenseNet121, InceptionV3

from tensorflow.keras.models import Model

# Ignore warnings

import warnings

warnings.filterwarnings("ignore")

print('Modules loaded')

# Generate data paths with labels

data_dir = '/kaggle/input/lung-and-colon-cancer-histopathological-images/lung_colon_image_set'

filepaths = []

labels = []

folds = os.listdir(data_dir)

# Generate paths and labels

for fold in folds:
    foldpath = os.path.join(data_dir, fold)
    flist = os.listdir(foldpath)
    for f in flist:
        f_path = os.path.join(foldpath, f)
        filelist = os.listdir(f_path)
        for file in filelist:
            fpath = os.path.join(f_path, file)
            filepaths.append(fpath)
            if f == 'colon_aca':
                labels.append('Colon Adenocarcinoma')
            elif f == 'colon_n':
                labels.append('Colon Benign Tissue')
            elif f == 'lung_aca':
                labels.append('Lung Adenocarcinoma')
            elif f == 'lung_n':
                labels.append('Lung Benign Tissue')
            elif f == 'lung_scc':
                labels.append('Lung Squamous Cell Carcinoma')

# Concatenate data paths with labels into a DataFrame

df = pd.DataFrame({'filepaths': filepaths, 'labels': labels})

# Split dataset into train, validation, and test sets

train_df, temp_df = train_test_split(df, train_size=0.8, stratify=df['labels'], random_state=42)

valid_df, test_df = train_test_split(temp_df, train_size=0.5, stratify=temp_df['labels'], random_state=42)

# Define image size, channels, and batch size

batch_size = 64

img_size = (224, 224)

channels = 3

img_shape = (img_size[0], img_size[1], channels)

# Create ImageDataGenerator for training and validation

train_datagen = ImageDataGenerator()

valid_datagen = ImageDataGenerator()

train_gen = train_datagen.flow_from_dataframe(
    train_df, x_col='filepaths', y_col='labels',
    target_size=img_size, class_mode='categorical',
    batch_size=batch_size, shuffle=True)

valid_gen = valid_datagen.flow_from_dataframe(
    valid_df, x_col='filepaths', y_col='labels',
    target_size=img_size, class_mode='categorical',
    batch_size=batch_size, shuffle=True)

test_gen = valid_datagen.flow_from_dataframe(
    test_df, x_col='filepaths', y_col='labels',
    target_size=img_size, class_mode='categorical',
    batch_size=batch_size, shuffle=False)

# Get the number of classes

num_classes = len(train_gen.class_indices)

# Define the model

#base_model = DenseNet121(input_shape=img_shape, include_top=False, weights='imagenet')

#base_model = InceptionV3(input_shape=img_shape, include_top=False, weights='imagenet')

base_model = DenseNet121(input_shape=img_shape, include_top=False, weights='imagenet')

base_model.trainable = True

x = base_model.output

x = GlobalAveragePooling2D()(x)

x = Dense(256, activation='relu')(x)

predictions = Dense(num_classes, activation='softmax')(x)

model_DenseNet = Model(inputs=base_model.input, outputs=predictions)

# Compile the model

model_DenseNet.compile(optimizer=Adamax(learning_rate=0.001), loss='categorical_crossentropy', metrics=['accuracy'])

# Define callbacks

callbacks = [
    ModelCheckpoint(filepath='best_model.keras', monitor='val_loss', save_best_only=True, verbose=1),
    EarlyStopping(monitor='val_loss', patience=5, restore_best_weights=True, verbose=1),
    ReduceLROnPlateau(monitor='val_loss', factor=0.2, patience=3, min_lr=1e-6, verbose=1)
]

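# Helper function to calculate weighted precision, recall, and F1
def calculate_metrics(generator, model):
    # Read labels batch by batch so they stay aligned with the predictions.
    # generator.classes is in file order, which does not match the batch
    # order when the generator was created with shuffle=True.
    y_true, y_pred = [], []
    for i in range(len(generator)):
        x_batch, y_batch = generator[i]
        preds = model.predict_on_batch(x_batch)
        y_true.extend(np.argmax(y_batch, axis=1))
        y_pred.extend(np.argmax(preds, axis=1))
    precision = precision_score(y_true, y_pred, average='weighted')
    recall = recall_score(y_true, y_pred, average='weighted')
    f1 = f1_score(y_true, y_pred, average='weighted')
    return precision, recall, f1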

# Custom callback that prints precision, recall, and F1 after each epoch
class MetricsCallback(tf.keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        # Training metrics
        train_precision, train_recall, train_f1 = calculate_metrics(train_gen, self.model)
        print(f'Epoch {epoch+1} Training Precision: {train_precision:.4f}, Recall: {train_recall:.4f}, F1 Score: {train_f1:.4f}')
        # Validation metrics
        val_precision, val_recall, val_f1 = calculate_metrics(valid_gen, self.model)
        print(f'Epoch {epoch+1} Validation Precision: {val_precision:.4f}, Recall: {val_recall:.4f}, F1 Score: {val_f1:.4f}')

# Measure training time

start_time = time.time()

# Train the model with the custom metrics callback

history = model_DenseNet.fit(train_gen, validation_data=valid_gen, epochs=20, callbacks=[MetricsCallback()] + callbacks)

end_time = time.time()

training_time = end_time - start_time

print(f'Total Training Time: {training_time:.2f} seconds')

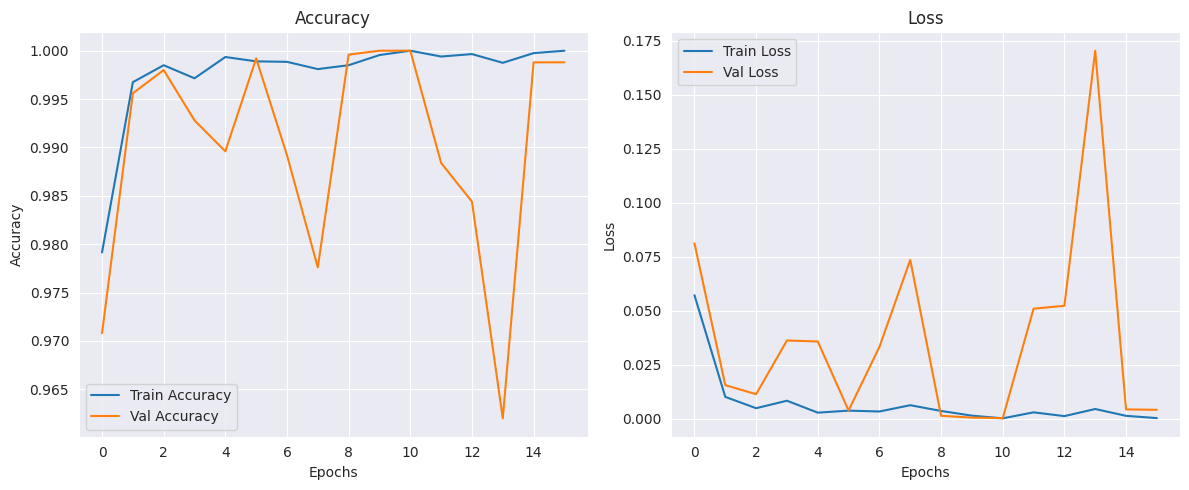

# Plot training history (accuracy and loss)

plt.figure(figsize=(12, 5))

# Plot accuracy

plt.subplot(1, 2, 1)

plt.plot(history.history['accuracy'], label='Train Accuracy')

plt.plot(history.history['val_accuracy'], label='Val Accuracy')

plt.title('Accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

# Plot loss

plt.subplot(1, 2, 2)

plt.plot(history.history['loss'], label='Train Loss')

plt.plot(history.history['val_loss'], label='Val Loss')

plt.title('Loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.tight_layout()

plt.show()

# Measure testing time

start_time = time.time()

# Evaluate on the test set

test_loss, test_acc = model_DenseNet.evaluate(test_gen)

end_time = time.time()

testing_time = end_time - start_time

print(f'Test Accuracy: {test_acc:.4f}')

print(f'Total Testing Time: {testing_time:.2f} seconds')

# Final metrics on the test set

test_precision, test_recall, test_f1 = calculate_metrics(test_gen, model_DenseNet)

print(f'Test Precision: {test_precision:.4f}, Recall: {test_recall:.4f}, F1 Score: {test_f1:.4f}')

Modules loaded

Found 20000 validated image filenames belonging to 5 classes.

Found 2500 validated image filenames belonging to 5 classes.

Found 2500 validated image filenames belonging to 5 classes.

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/densenet/densenet121_weights_tf_dim_ordering_tf_kernels_notop.h5

29084464/29084464 ━━━━━━━━━━━━━━━━━━━━ 2s 0us/step

Epoch 1/20

WARNING: All log messages before absl::InitializeLog() is called are written to STDERR

I0000 00:00:1727877429.788395 66 service.cc:145] XLA service 0x7ee670004570 initialized for platform CUDA (this does not guarantee that XLA will be used). Devices:

I0000 00:00:1727877429.788469 66 service.cc:153] StreamExecutor device (0): Tesla P100-PCIE-16GB, Compute Capability 6.0

I0000 00:00:1727877519.057475 66 device_compiler.h:188] Compiled cluster using XLA! This line is logged at most once for the lifetime of the process.

313/313 ━━━━━━━━━━━━━━━━━━━━ 126s 376ms/step

Epoch 1 Training Precision: 0.2049, Recall: 0.2047, F1 Score: 0.2045

40/40 ━━━━━━━━━━━━━━━━━━━━ 18s 443ms/step

Epoch 1 Validation Precision: 0.2066, Recall: 0.2064, F1 Score: 0.2061

Epoch 1: val_loss improved from inf to 0.08119, saving model to best_model.keras

313/313 ━━━━━━━━━━━━━━━━━━━━ 627s 2s/step - accuracy: 0.9469 - loss: 0.1441 - val_accuracy: 0.9708 - val_loss: 0.0812 - learning_rate: 0.0010

Epoch 2/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 101s 322ms/step

Epoch 2 Training Precision: 0.1954, Recall: 0.1955, F1 Score: 0.1954

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 321ms/step

Epoch 2 Validation Precision: 0.1956, Recall: 0.1956, F1 Score: 0.1956

Epoch 2: val_loss improved from 0.08119 to 0.01547, saving model to best_model.keras

313/313 ━━━━━━━━━━━━━━━━━━━━ 241s 762ms/step - accuracy: 0.9978 - loss: 0.0084 - val_accuracy: 0.9956 - val_loss: 0.0155 - learning_rate: 0.0010

Epoch 3/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 97s 311ms/step

Epoch 3 Training Precision: 0.1980, Recall: 0.1980, F1 Score: 0.1980

40/40 ━━━━━━━━━━━━━━━━━━━━ 12s 308ms/step

Epoch 3 Validation Precision: 0.1932, Recall: 0.1932, F1 Score: 0.1932

Epoch 3: val_loss improved from 0.01547 to 0.01127, saving model to best_model.keras

313/313 ━━━━━━━━━━━━━━━━━━━━ 234s 741ms/step - accuracy: 0.9977 - loss: 0.0078 - val_accuracy: 0.9980 - val_loss: 0.0113 - learning_rate: 0.0010

Epoch 4/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 102s 327ms/step

Epoch 4 Training Precision: 0.2038, Recall: 0.2037, F1 Score: 0.2038

40/40 ━━━━━━━━━━━━━━━━━━━━ 14s 356ms/step

Epoch 4 Validation Precision: 0.1916, Recall: 0.1916, F1 Score: 0.1916

Epoch 4: val_loss did not improve from 0.01127

313/313 ━━━━━━━━━━━━━━━━━━━━ 238s 755ms/step - accuracy: 0.9977 - loss: 0.0069 - val_accuracy: 0.9928 - val_loss: 0.0362 - learning_rate: 0.0010

Epoch 5/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 102s 327ms/step

Epoch 5 Training Precision: 0.1990, Recall: 0.1990, F1 Score: 0.1990

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 316ms/step

Epoch 5 Validation Precision: 0.2018, Recall: 0.2020, F1 Score: 0.2019

Epoch 5: val_loss did not improve from 0.01127

313/313 ━━━━━━━━━━━━━━━━━━━━ 241s 762ms/step - accuracy: 0.9990 - loss: 0.0035 - val_accuracy: 0.9896 - val_loss: 0.0357 - learning_rate: 0.0010

Epoch 6/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 99s 317ms/step

Epoch 6 Training Precision: 0.2038, Recall: 0.2038, F1 Score: 0.2038

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 335ms/step

Epoch 6 Validation Precision: 0.2064, Recall: 0.2064, F1 Score: 0.2064

Epoch 6: val_loss improved from 0.01127 to 0.00370, saving model to best_model.keras

313/313 ━━━━━━━━━━━━━━━━━━━━ 239s 757ms/step - accuracy: 0.9991 - loss: 0.0036 - val_accuracy: 0.9992 - val_loss: 0.0037 - learning_rate: 0.0010

Epoch 7/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 102s 325ms/step

Epoch 7 Training Precision: 0.1999, Recall: 0.1998, F1 Score: 0.1999

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 320ms/step

Epoch 7 Validation Precision: 0.2075, Recall: 0.2076, F1 Score: 0.2075

Epoch 7: val_loss did not improve from 0.00370

313/313 ━━━━━━━━━━━━━━━━━━━━ 239s 758ms/step - accuracy: 0.9994 - loss: 0.0016 - val_accuracy: 0.9892 - val_loss: 0.0332 - learning_rate: 0.0010

Epoch 8/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 103s 328ms/step

Epoch 8 Training Precision: 0.2001, Recall: 0.2001, F1 Score: 0.2000

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 330ms/step

Epoch 8 Validation Precision: 0.2047, Recall: 0.2052, F1 Score: 0.2048

Epoch 8: val_loss did not improve from 0.00370

313/313 ━━━━━━━━━━━━━━━━━━━━ 238s 754ms/step - accuracy: 0.9973 - loss: 0.0082 - val_accuracy: 0.9776 - val_loss: 0.0735 - learning_rate: 0.0010

Epoch 9/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 99s 316ms/step

Epoch 9 Training Precision: 0.2058, Recall: 0.2058, F1 Score: 0.2058

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 332ms/step

Epoch 9 Validation Precision: 0.2040, Recall: 0.2040, F1 Score: 0.2040

Epoch 9: val_loss improved from 0.00370 to 0.00127, saving model to best_model.keras

313/313 ━━━━━━━━━━━━━━━━━━━━ 236s 747ms/step - accuracy: 0.9982 - loss: 0.0044 - val_accuracy: 0.9996 - val_loss: 0.0013 - learning_rate: 0.0010

Epoch 10/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 102s 326ms/step

Epoch 10 Training Precision: 0.1983, Recall: 0.1983, F1 Score: 0.1983

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 333ms/step

Epoch 10 Validation Precision: 0.2008, Recall: 0.2008, F1 Score: 0.2008

Epoch 10: val_loss improved from 0.00127 to 0.00041, saving model to best_model.keras

313/313 ━━━━━━━━━━━━━━━━━━━━ 239s 756ms/step - accuracy: 0.9993 - loss: 0.0019 - val_accuracy: 1.0000 - val_loss: 4.1266e-04 - learning_rate: 0.0010

Epoch 11/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 101s 321ms/step

Epoch 11 Training Precision: 0.1967, Recall: 0.1967, F1 Score: 0.1967

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 330ms/step

Epoch 11 Validation Precision: 0.2072, Recall: 0.2072, F1 Score: 0.2072

Epoch 11: val_loss improved from 0.00041 to 0.00016, saving model to best_model.keras

313/313 ━━━━━━━━━━━━━━━━━━━━ 245s 775ms/step - accuracy: 1.0000 - loss: 7.3654e-05 - val_accuracy: 1.0000 - val_loss: 1.6086e-04 - learning_rate: 0.0010

Epoch 12/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 100s 320ms/step

Epoch 12 Training Precision: 0.1973, Recall: 0.1973, F1 Score: 0.1973

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 322ms/step

Epoch 12 Validation Precision: 0.2107, Recall: 0.2108, F1 Score: 0.2107

Epoch 12: val_loss did not improve from 0.00016

313/313 ━━━━━━━━━━━━━━━━━━━━ 234s 743ms/step - accuracy: 0.9996 - loss: 0.0021 - val_accuracy: 0.9884 - val_loss: 0.0509 - learning_rate: 0.0010

Epoch 13/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 101s 322ms/step

Epoch 13 Training Precision: 0.2058, Recall: 0.2059, F1 Score: 0.2058

40/40 ━━━━━━━━━━━━━━━━━━━━ 14s 340ms/step

Epoch 13 Validation Precision: 0.1966, Recall: 0.1968, F1 Score: 0.1966

Epoch 13: val_loss did not improve from 0.00016

313/313 ━━━━━━━━━━━━━━━━━━━━ 239s 755ms/step - accuracy: 0.9997 - loss: 6.9319e-04 - val_accuracy: 0.9844 - val_loss: 0.0522 - learning_rate: 0.0010

Epoch 14/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 100s 319ms/step

Epoch 14 Training Precision: 0.2015, Recall: 0.2017, F1 Score: 0.2015

40/40 ━━━━━━━━━━━━━━━━━━━━ 14s 337ms/step

Epoch 14 Validation Precision: 0.2111, Recall: 0.2112, F1 Score: 0.2110

Epoch 14: val_loss did not improve from 0.00016

Epoch 14: ReduceLROnPlateau reducing learning rate to 0.00020000000949949026.

313/313 ━━━━━━━━━━━━━━━━━━━━ 237s 750ms/step - accuracy: 0.9987 - loss: 0.0037 - val_accuracy: 0.9620 - val_loss: 0.1705 - learning_rate: 0.0010

Epoch 15/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 101s 324ms/step

Epoch 15 Training Precision: 0.2011, Recall: 0.2011, F1 Score: 0.2011

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 330ms/step

Epoch 15 Validation Precision: 0.2024, Recall: 0.2024, F1 Score: 0.2024

Epoch 15: val_loss did not improve from 0.00016

313/313 ━━━━━━━━━━━━━━━━━━━━ 236s 749ms/step - accuracy: 0.9995 - loss: 0.0023 - val_accuracy: 0.9988 - val_loss: 0.0042 - learning_rate: 2.0000e-04

Epoch 16/20

313/313 ━━━━━━━━━━━━━━━━━━━━ 99s 315ms/step

Epoch 16 Training Precision: 0.1997, Recall: 0.1997, F1 Score: 0.1997

40/40 ━━━━━━━━━━━━━━━━━━━━ 13s 317ms/step

Epoch 16 Validation Precision: 0.1920, Recall: 0.1920, F1 Score: 0.1920

Epoch 16: val_loss did not improve from 0.00016

313/313 ━━━━━━━━━━━━━━━━━━━━ 233s 738ms/step - accuracy: 1.0000 - loss: 1.9201e-04 - val_accuracy: 0.9988 - val_loss: 0.0040 - learning_rate: 2.0000e-04

Epoch 16: early stopping

Restoring model weights from the end of the best epoch: 11.

Total Training Time: 4199.01 seconds

40/40 ━━━━━━━━━━━━━━━━━━━━ 27s 692ms/step - accuracy: 1.0000 - loss: 2.4379e-04

Test Accuracy: 1.0000

Total Testing Time: 28.88 seconds

40/40 ━━━━━━━━━━━━━━━━━━━━ 12s 311ms/step

Test Precision: 1.0000, Recall: 1.0000, F1 Score: 1.0000
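
In the log above, the per-epoch training and validation precision, recall, and F1 values stay near 0.20 (chance level for 5 classes), while the Keras accuracy for the same epochs is above 0.94 and the test-set metrics reach 1.0000. One plausible explanation is a label-order mismatch: predictions taken from a generator created with `shuffle=True` arrive in shuffled order, whereas `generator.classes` lists labels in file order. The sketch below reproduces that effect with synthetic labels only. The names `labels_file_order`, `perm`, and `preds_shuffled_order` are hypothetical and do not come from the notebook.

```python
import numpy as np

rng = np.random.default_rng(0)
num_classes = 5
n = 20000

# Synthetic ground-truth labels in file order
# (the role generator.classes plays in the notebook).
labels_file_order = rng.integers(0, num_classes, size=n)

# A shuffled generator serves the samples in a permuted order.
perm = rng.permutation(n)

# Assume a perfect classifier: its predictions equal the true labels,
# but they come back in the shuffled order.
preds_shuffled_order = labels_file_order[perm]

# Misaligned comparison: shuffled predictions against file-order labels.
misaligned_acc = np.mean(preds_shuffled_order == labels_file_order)

# Aligned comparison: apply the same permutation to the labels first.
aligned_acc = np.mean(preds_shuffled_order == labels_file_order[perm])

print(f"misaligned accuracy: {misaligned_acc:.4f}")
print(f"aligned accuracy:    {aligned_acc:.4f}")
```

Under these assumptions the misaligned score lands close to 1/5 even though every prediction is correct, which matches the pattern in the log. The test-set figures are unaffected because `test_gen` was created with `shuffle=False`.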